It’s been a while since I’ve done much tinkering with my home servers – frankly because I’ve been pretty satisfied with how they’ve been running as-is!

I think it was back in 2018 that I migrated the first half of my setup to rackmount (old enterprise) hardware, with Plex and everything else running under virtualization for the first time. Then in 2019 I added 4U of NAS boxes to move all of my media over and put an end to running anything on desktop hardware.

For the most part, it’s been great – I’ve got a couple of beefy batteries to help ride out power blips, and Unraid is so much better at managing disks than I ever was at shuffling stuff from one external hard drive to another … particularly when you’re looking at upwards of a dozen disks…

I believe I’m actually running 15 now, and I’ll probably add more if I can find a good Black Friday sale in a few months, but regardless…

The limitation of using old enterprise hardware is that there’s only so much you can upgrade. All of the machines I’m running are what Dell calls 11th generation, while its current servers are 15th generation, so when it comes to things like processor upgrades and even drive support, there’s not a ton of wiggle room.

Not a big deal, considering that, as I said, they’re running pretty smoothly, but still … I guess you could say I got bored and felt like seeing what I could tweak anyway? 😛

About a month ago, I settled on four relatively simple upgrades that I could do to beef up the main server that runs my VMs, at least to help it hold out until I’m able to replace it with a new build altogether…

- New CPUs – This sounds like a big endeavor, but I literally found a pair of matched CPUs on eBay for like $40.

- Add SSDs – This is the one I just finished, and it wasn’t as easy as I had assumed!

- 10 gigabit ethernet – I’ll do this one later on this fall … I don’t have a 10-gig switch yet, so I figured in the short term I could run 10-gig just between the two servers and at least see some speed boosts there.

- Graphics Card for Plex Transcoding – Probably my last upgrade because A) the card alone runs about $500, and B) I have to do some other mods, like replacing a riser in the case, just to get the thing to fit. Still, this will have the biggest impact because it should make all Plex transcoding a walk in the park…

Swapping out the CPUs probably made me the most nervous – I mean, you’re basically doing brain surgery on your computer – but aside from a brief scare when I couldn’t find my thermal paste, the replacement went super smoothly, and the server booted up with the new chips a few minutes later, no problem!

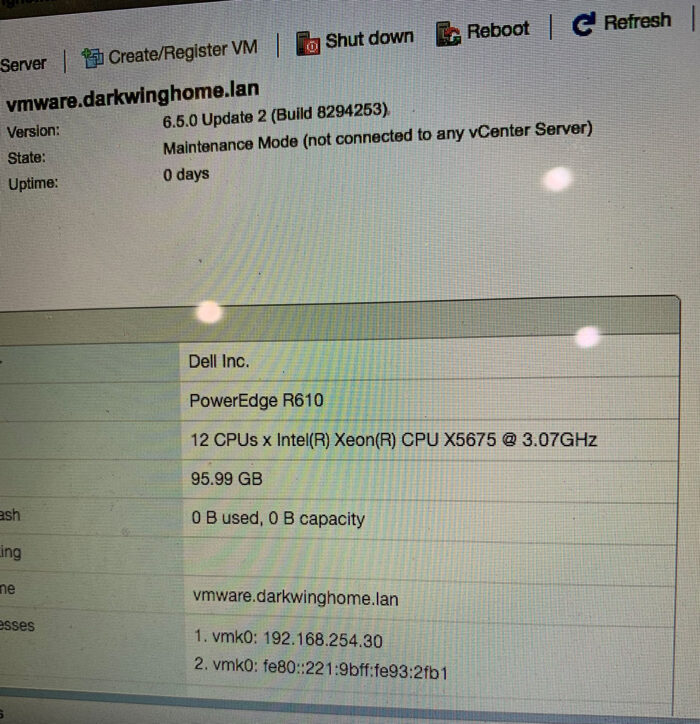

I went from two 4-core CPUs running at 2.5 GHz (16 threads total with hyperthreading) to two 6-core CPUs running at 3 GHz (24 threads total), which will basically give me a little more overhead for Plex transcoding while I wait to add a real graphics card.

And now on to today’s adventure, or should we say last week’s adventure???

My Plex libraries have gotten pretty massive – think thousands of movies and TV shows across over 100 TB of media – and one recommendation I’ve heard for improving performance as your library grows is to move the metadata and database over to an SSD. As I understand it, the gain isn’t so much the interface speed itself as the IOPS (input/output operations per second): the metadata is made up of tons of tiny files for all of the artwork, and SSDs handle lots of small, random reads far better than spinning disks do.
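As a quick way to see the “tons of tiny files” point for yourself, here’s a small shell sketch that counts the files in a folder and estimates their average size on disk. The function name is made up, and the example path is the usual default for a Linux package install of Plex Media Server, which is an assumption; point it at wherever your own server keeps its metadata.

```shell
#!/bin/sh
# Rough gauge of how "small-file heavy" a folder is: file count plus the
# average on-disk size per file. du counts directories and block rounding,
# so treat the average as a ballpark figure, not an exact one.

small_file_stats() {
  dir="$1"
  # Count regular files (assumes no newlines in file names).
  count=$(( $(find "$dir" -type f | wc -l) ))
  if [ "$count" -eq 0 ]; then
    echo "files=0 avg_kib=0"
    return 0
  fi
  # Total space used under the folder, in KiB.
  kib=$(du -sk "$dir" | cut -f1)
  echo "files=$count avg_kib=$(( kib / count ))"
}

# Example (hypothetical path, the usual Linux package default; adjust for your setup):
# small_file_stats "/var/lib/plexmediaserver/Library/Application Support/Plex Media Server/Metadata"
```

A very high file count with an average of only a few KiB per file is the kind of workload where IOPS, rather than raw throughput, tends to be the bottleneck.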

Anyways, I originally wanted to go with NVMe because I understand those are by far the fastest drives available right now. Unfortunately, there was no good way to get them into my system. Sure, I could buy a card to add them, but RAID wouldn’t be supported, and I’ve come to appreciate the redundancy because I know how easy it is for drives to fail…

So instead I went with regular old SSDs, because I could fit them right into my normal drive bays and my existing RAID controller could manage them like any other drive. Easy, right?!

Well, apparently the PERC6i RAID controller that I had in my Dell R610 doesn’t like SSDs, or at least doesn’t like Western Digital Red SSDs, because it would detect the drives at startup but refuse to do anything with them, reporting failures.

I tried swapping drive bays, thinking it was a bad cable.

I tried upgrading the firmware for the card, which OMG took forever because the server is so old that the online firmware update process no longer works.

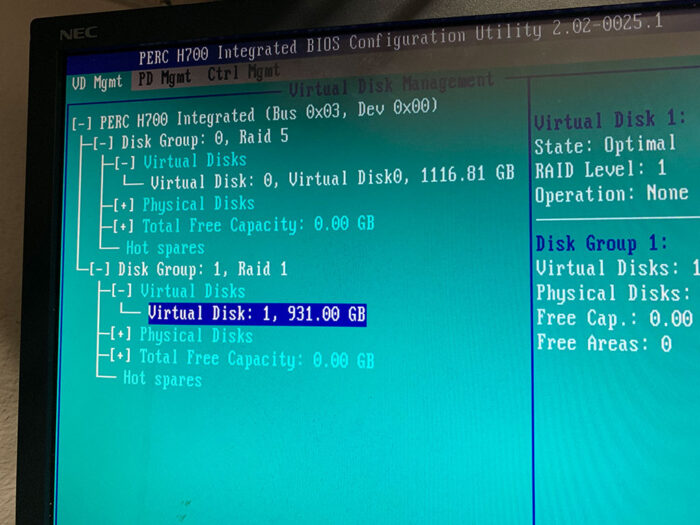

Finally, I decided to try replacing the card altogether, since I knew the PERC6i couldn’t use larger drives either … not a problem in this case, but still. I ordered an H700 off of eBay instead and waited a week, then waited another week while I tried to figure out how to back up all of my VMs just in case!

As of now, everything is up and running as expected, so luckily I didn’t end up needing those backups, but it sure was close for a minute this afternoon…

Installing the card itself was pretty easy, thanks to this video, which walks through the whole process…

That said, the cables that shipped with the card weren’t long enough to route through my case correctly, so now the case won’t close! I have replacements coming in a couple of days that cost me another $20 on top of the $40 I spent on the card, cables, and new battery.

Once I booted up with the new card, the new SSDs showed up immediately as Online!

Not so much for the existing drives, but that was an error on my part: I missed a prompt to “Import the Foreign Array”. A second reboot to correct that showed everything, and a few minutes later I was initializing the new array while a background initialization ran on the old one, which finished by the time I was done with lunch.

But we weren’t out of the woods yet!

When I booted back into ESXi, it saw the new SSDs too, and I was able to create the new datastore I wanted to move my Plex VM to … but the old array was nowhere in sight, and as a result ESXi thought all of my existing VMs were invalid.

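That sort of made sense, because I figured the identifiers for the drives had probably changed with the new card, and ESXi won’t automatically mount a VMFS datastore that suddenly shows up on a different device; it treats it as a snapshot (a copy) instead. After a healthy amount of digging, I found that the trick was to SSH into ESXi itself and run these two commands…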

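```shell
# List VMFS volumes that ESXi has detected as snapshots (unresolved copies)
esxcli storage vmfs snapshot list
# Mount one by its volume label; -n (--no-persist) means it is not remounted after a reboot
esxcli storage vmfs snapshot mount -n -l "SNAPSHOT_NAME"
```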

The first command confirmed that my datastore still existed and the second one mounted it so that ESXi would recognize it as usual. (this link explains it all)

From here it was simply a matter of unregistering each VM, moving them one by one to the new SSD datastore, and then re-registering them as existing VMs. Apparently the one downside of doing it this way is that each VM’s disk provisioning type changes from thin to thick, which means the disks take up their full allocation instead of only what they’re using at the time. But I’m only using the new SSD datastore for two VMs, and they’ll both probably get rebuilt eventually for OS upgrades anyway, so it wasn’t worth the hassle with vCenter/vSphere/whatever, which I honestly don’t understand yet.
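If you’d rather keep thin provisioning, one commonly used alternative is to copy the VM’s files over SSH and clone its disk with `vmkfstools`, asking for a thin destination. This isn’t the process described above, just a minimal sketch: it assumes the VM is powered off, has a single disk named after its folder (e.g. `plex/plex.vmdk`), and that the function name, VM ID, and datastore paths are hypothetical placeholders you’d swap for your own. With `DRY_RUN=1` it only prints the commands it would run.

```shell
#!/bin/sh
# Sketch: move one powered-off VM to another datastore on the same ESXi host
# and clone its disk as thin-provisioned. Assumes a single disk <vm>.vmdk and a
# config file <vm>.vmx in a folder named <vm>; all names/paths are placeholders.
# Set DRY_RUN=1 to print the commands instead of running them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

move_vm_thin() {
  vmid="$1"  # numeric Vmid, as listed by: vim-cmd vmsvc/getallvms
  vm="$2"    # VM folder and file base name, e.g. plex (hypothetical)
  src="$3"   # old datastore, e.g. /vmfs/volumes/old-datastore (hypothetical)
  dst="$4"   # new datastore, e.g. /vmfs/volumes/ssd-datastore (hypothetical)

  # Remove the VM from the host inventory; its files on disk are left alone.
  run vim-cmd vmsvc/unregister "$vmid" || return 1
  run mkdir -p "$dst/$vm" || return 1
  # The .vmx usually refers to its disk by relative file name, so keeping the
  # same name and folder layout keeps that reference valid.
  run cp "$src/$vm/$vm.vmx" "$dst/$vm/" || return 1
  # Clone the disk; -d thin allocates only the blocks the guest has written.
  run vmkfstools -i "$src/$vm/$vm.vmdk" "$dst/$vm/$vm.vmdk" -d thin || return 1
  # Add the copy back to the inventory from its new location.
  run vim-cmd solo/registervm "$dst/$vm/$vm.vmx"
}
```

For example, `DRY_RUN=1 move_vm_thin 12 plex /vmfs/volumes/old-datastore /vmfs/volumes/ssd-datastore` prints the five commands without touching anything. When you power the copy on for the first time, ESXi may ask whether the VM was moved or copied, and the original files stay on the old datastore until you delete them yourself.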

They’re moved, they’re running again, and we’re done!

Now can I tell much of a difference???

I was hoping to see faster download speeds on the one VM, but not so much there.

For Plex itself, the interface does seem a lot snappier, both locally on my laptop, phone, and TV and on my phone over cellular data. When browsing through pages of large libraries on the TV, artwork now seems to pop onto the screen in maybe a second per page, where it used to take a couple of seconds, so that’s a win in my book.

And again, I wasn’t exactly expecting huge gains from either of these, but aside from pounding my head against the wall for the last week over the stupid RAID card, both were fun little upgrades that added incremental performance gains, so I’ll take it.

Next up will likely be the 10-gig upgrade because I know what parts I need and just need to get them ordered. The goal is to string the two servers together for starters, and then maybe add a 10-gig switch next year around the same time I upgrade to a new MacBook that can support 10-gig, too!

My longer-term goal, I’ve decided, is to eventually build new servers myself to replace all of these. Still rackmount, but I want to build them myself because new enterprise gear is ridiculously expensive, and I think I’m comfortable enough with it now to go it alone. I’ve gotten a lot of inspiration from watching Linus Tech Tips and the evolution of the server room at their office, so ideally I’d wait until we build a new house in a couple of years, where I can:

- Install a full rack in a dedicated room (that isn’t our bedroom closet!)

- Build two identical servers so that I can run them as a cluster, making it easier to make changes without taking Plex down

- Build a replacement NAS, probably in a single 4U case

- Along with a matching backup NAS

As you can see, it’ll be a big endeavor that will take some time to put together, but I’m in no rush and I think my current rig will keep us moving forward just fine until then.

We’ll see if the other two upgrades are crazy enough to warrant a blog post of their own!